The Economic Migration Barometer Series: A Survey Translation Case Study Episode 4 – Linguistic Quality Assurance and Optical Check

by Steve Dept, cApStAn CEO

Readers of this series on good practice in survey translation know that the Economic Migration Barometer is a fictitious project, set up for the sole purpose of illustrating the complexity of survey translation and the added value of a robust linguistic quality assurance design. This fourth episode takes a closer look at the components of linguistic quality assurance that take place after the translation itself, including the optical check, which is particularly important in a computer-assisted personal interviewing (CAPI) survey.

Our imaginary Economic Migration Barometer (EMB) collects data on attitudes towards economic migration in countries from which people are known to travel abroad in search of work. If you have read the first three episodes, you will know that in this project we were fortunate enough to be able to implement good practices. Translation memories were generated from existing translations of a previous wave of the survey (Episode 1). There was a translatability assessment (Episode 2), and clear question-by-question translation and adaptation notes were developed. There were preliminary meetings between the client’s platform engineer and cApStAn’s translation technologist (Episode 3). There was a well-designed translation workflow, and robust tools were used. Is that enough to ensure that the 12 target language versions of the survey are valid and comparable? Perhaps it is. It should be. However, one can’t be sure: no survey translation process is complete without linguistic quality assurance and equivalence checks.

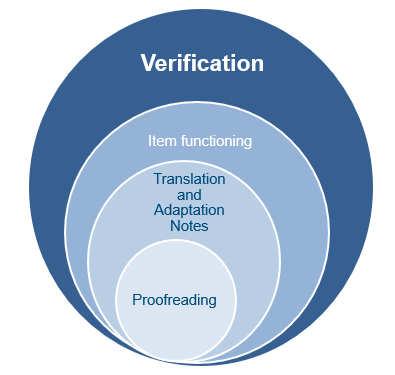

We set up a two-tier linguistic quality assurance design: a translation verification and an optical check. The scope of verification is broader than a linguistic review. Proofreading skills are but a modest subset of the skills deployed by a verifier, who needs to strike the right balance between faithfulness to the source and fluency in the target version.

So, what is Translation Verification?

The trained verifiers focussed on linguistic equivalence, which is a proxy for functional equivalence. They performed a thorough, segment-by-segment equivalence check between the target and source versions of the survey questions, and used cApStAn’s set of 14 verifier intervention categories to report each case where the translated question was likely to be understood differently in the target region. These categories ensure that verifiers report equivalence issues in a standardised way.

For example, the <language 1> variable had been translated literally as “language” in the Dari version, whereas it should have been adapted to “Dari” in one option and “Pashto” in the other. The verifier selected the “Adaptation issue” category, implemented the correction directly in the Dari version in the CAT tool (in this case, she replaced “language” with “Dari” and “Pashto”), and explained the rationale for the intervention in English in a dedicated comment field. This allowed us to generate reports on the types of issues found in each language version, and also on each question across all language versions. Part of the verifier’s brief was to report on compliance with each question-by-question translation and adaptation note, so we also had statistics on that aspect.
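Because every intervention carries one of the standardised categories, reports per language version and per question boil down to simple tallies. The sketch below assumes a hypothetical export in which each intervention is a record with a language, a question identifier and a category. The `Intervention` class, the `tally` function, the question IDs, "LanguageB" and "Category X" are illustrative placeholders; only "Adaptation issue" is a category named in this article, and the real set of 14 categories is not reproduced here.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Intervention:
    """One verifier intervention, as it might be exported from a CAT tool (hypothetical schema)."""
    language: str     # target language version, e.g. "Dari"
    question_id: str  # hypothetical question identifier, e.g. "Q5"
    category: str     # one of the standardised intervention categories


def tally(interventions: Iterable[Intervention], key: str) -> Dict[str, Counter]:
    """Count interventions per category, grouped by `key` ("language" or "question_id")."""
    table: Dict[str, Counter] = defaultdict(Counter)
    for item in interventions:
        table[getattr(item, key)][item.category] += 1
    return dict(table)


# Hypothetical log: only "Adaptation issue" is a category named in the article.
log = [
    Intervention("Dari", "Q5", "Adaptation issue"),
    Intervention("LanguageB", "Q5", "Adaptation issue"),
    Intervention("Dari", "Q9", "Category X"),
]

by_language = tally(log, "language")     # issue types in each language version
by_question = tally(log, "question_id")  # issue types per question, across all versions
print(by_language["Dari"]["Category X"])      # 1
print(by_question["Q5"]["Adaptation issue"])  # 2
```

Grouping by question across languages is what reveals a source question that trips up several translations at once, which is often a hint that the source wording or the translation note needs revisiting.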

Finally, when the verified translation was ready, the XLIFF files were imported back into the client’s platform, and an optical check was performed: the verifier looked at each screen, saw what the respondent would see, and checked that all imports appeared correctly, that no label was truncated and that the translated version was ready for production. Time to go live!
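The optical check itself is a human, screen-by-screen review, because truncation depends on how the platform renders each label. As a hypothetical aid, a verifier could first pre-screen the XLIFF files for segments whose translation is much longer than the source, to know which screens deserve extra attention. The sketch below assumes XLIFF 1.2 files; the function names, the 1.3 length ratio and the 10-character minimum are illustrative assumptions, not cApStAn tooling or any platform’s actual limits.

```python
import xml.etree.ElementTree as ET

# XLIFF 1.2 namespace; XLIFF 2.x files use a different namespace and element names.
XLIFF12 = "urn:oasis:names:tc:xliff:document:1.2"


def text_of(elem):
    """Return all text in an element, including text nested in inline tags such as <g>."""
    return "".join(elem.itertext()) if elem is not None else ""


def flag_long_targets(xliff_data, max_ratio=1.3, min_source_len=10):
    """List (unit id, source, target) for segments much longer than their source.

    xliff_data may be str or bytes. max_ratio and min_source_len are
    hypothetical thresholds chosen for illustration only.
    """
    root = ET.fromstring(xliff_data)
    flagged = []
    for unit in root.iter(f"{{{XLIFF12}}}trans-unit"):
        source = text_of(unit.find(f"{{{XLIFF12}}}source"))
        target = text_of(unit.find(f"{{{XLIFF12}}}target"))
        # Very short labels (e.g. "Yes") routinely expand, so they are skipped.
        if len(source) >= min_source_len and len(target) > max_ratio * len(source):
            flagged.append((unit.get("id"), source, target))
    return flagged


# Usage (hypothetical file name):
# with open("emb_dari.xlf", "rb") as f:
#     for unit_id, src, tgt in flag_long_targets(f.read()):
#         print(unit_id, len(src), len(tgt))
```

A character-count ratio is only a rough proxy: it cannot tell whether a longer label actually overflows its field on screen, which is exactly why the verifier still looks at every screen.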

Meanwhile, if you would like to learn how we can help you with your survey localization projects, do fill in the form below and we’ll get back to you as soon as we can.